Optimizing Performance

Innovative does not provide end-user support for creating SQL queries. Sierra Direct SQL Access users assume responsibility for the effects of custom SQL. Poorly constructed queries can compromise system performance.

The following tips help you ensure optimal system performance:

Optimizing Reports on Material Type and Bib Level

Given that material type and bib level are stored in different BCODE columns for different sites, Innovative has explicitly (and redundantly) stored these values in dedicated tables to make writing reports clearer.

To access bib record numbers, their material type codes and material type descriptions, you could use the following SQL (assuming your site defines material type with BCODE2):

SELECT b.record_num AS "bib_record_number", b.bcode2 AS "material_type_code", u.name AS "material_type_description" FROM sierra_view.bib_view b JOIN sierra_view.user_defined_bcode2_myuser u ON b.bcode2 = u.code LIMIT 100

Instead, you should use the dedicated material_property_myuser view, joined through the bib_record_property view where the material_code is explicitly stored:

SELECT b.record_num AS "bib_record_number", brp.material_code AS "material_type_code", m.name AS "material_type_description" FROM sierra_view.bib_view b JOIN sierra_view.bib_record_property brp ON brp.bib_record_id = b.id JOIN sierra_view.material_property_myuser m ON brp.material_code = m.code LIMIT 100

Similarly for bib_level:

SELECT b.record_num AS "bib_record_number", brp.bib_level_code AS "bib_level_code", bl.name AS "bib_level_description" FROM sierra_view.bib_view b JOIN sierra_view.bib_record_property brp ON brp.bib_record_id = b.id JOIN sierra_view.bib_level_property_myuser bl ON brp.bib_level_code = bl.code LIMIT 100

How to Ensure that Your Queries Are Well-tuned

The default system timeout for a Sierra Direct SQL Access query is 10 minutes.

SQL queries can be written in a variety of ways to achieve the same result. Some statements are dramatically more efficient than others. Inefficient statements might take hours (or even days!) to complete. Worse, they can seriously degrade the performance of the production Sierra application.

Like most relational databases, PostgreSQL supports the EXPLAIN command, which "explains" how the database executes the SQL statement. PostgreSQL regularly analyzes the structure of the database (tables, indexes, index types, and so forth) as well as the contents of the database, such as how many rows each table has. It determines an optimal plan for executing the SQL statement by comparing the "cost" (measured in the number of 8K pages of I/O) of the various options. It will use the lowest cost plan and present that plan in response to the EXPLAIN command.

The following SQL sample includes the EXPLAIN command before the query:

-- -- list bibs with more than x items -- EXPLAIN SELECT b.record_num AS "bib_record", date(b.cataloging_date_gmt) AS "bib_cataloged_dt", b.title AS "bib_title", count(l.id) AS "item_count" FROM sierra_view.bib_view b JOIN sierra_view.bib_record_item_record_link l ON l.bib_record_id = b.id GROUP BY 1, 2, 3 HAVING count(l.id) > 100 ORDER BY 4 DESC

PostgreSQL returns the following plan:

QUERY PLAN

-----------------------------------------------------------------------------------------------------------------------------------------------------

Sort (cost=168530.75..168534.81 rows=1623 width=53)

Sort Key: (count(bib_record_item_record_link.id))

-> HashAggregate (cost=168419.86..168444.21 rows=1623 width=53)

Filter: (count(bib_record_item_record_link.id) > 100)

-> Nested Loop (cost=75459.66..168399.58 rows=1623 width=53)

-> Merge Join (cost=75459.66..167643.59 rows=541 width=53)

Merge Cond: (md.id = br.record_id)

-> Merge Join (cost=60430.93..209623.84 rows=546 width=53)

Merge Cond: (md.id = brp.bib_record_id)

-> Index Scan using pk_record_id on record_metadata md (cost=0.00..604517.90 rows=5442 width=12)

Filter: (((record_type = 'b'::bpchar) AND (char_length((campus_code)::text) = 0)) OR (campus_code IS NULL))

-> Index Scan using idx_bib_record_property__bib_record_id on bib_record_property brp (cost=0.00..38850.44 rows=588982 width=41)

-> Index Scan using bib_record_record_key on bib_record br (cost=0.00..63943.76 rows=584471 width=24)

-> Index Scan using uc_bib_record_item_record_link on bib_record_item_record_link (cost=0.00..1.24 rows=12 width=16)

Index Cond: (bib_record_id = br.id)

This query is quite expensive at 168,534 "8K pages of I/O".

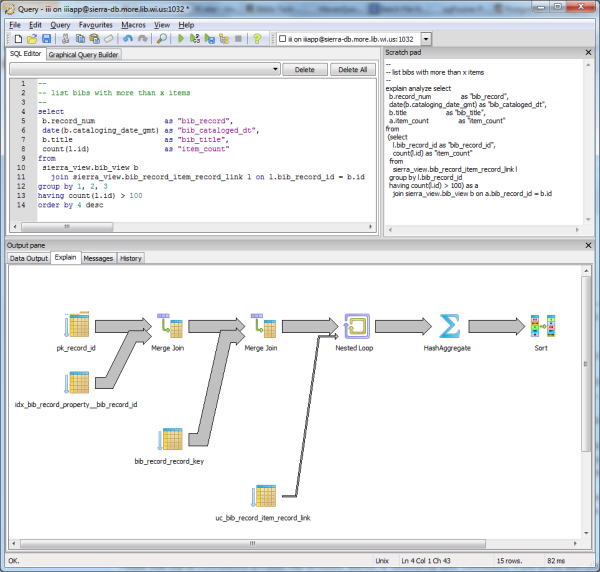

PgAdmin III's Explain tab provides a graphical representation of the plan:

You can add EXPLAIN ANALYZE to a query to get the actual run timing:

--

-- list bibs with more than x items

--

EXPLAIN ANALYZE SELECT

b.record_num AS "bib_record",

date(b.cataloging_date_gmt) AS "bib_cataloged_dt",

b.title AS "bib_title",

count(l.id) AS "item_count"

FROM

sierra_view.bib_view b

JOIN sierra_view.bib_record_item_record_link l ON l.bib_record_id = b.id

GROUP BY 1, 2, 3

HAVING count(l.id) > 100

ORDER BY 4 DESC

PostgreSQL returns the following plan:

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------------------------------

Sort (cost=168530.75..168534.81 rows=1623 width=53) (actual time=23819.456..23819.497 rows=233 loops=1)

Sort Key: (count(bib_record_item_record_link.id))

Sort Method: quicksort Memory: 45kB

-> HashAggregate (cost=168419.86..168444.21 rows=1623 width=53) (actual time=23500.412..23819.018 rows=233 loops=1)

Filter: (count(bib_record_item_record_link.id) > 100)

-> Nested Loop (cost=75459.66..168399.58 rows=1623 width=53) (actual time=628.412..21255.439 rows=1752105 loops=1)

-> Merge Join (cost=75459.66..167643.59 rows=541 width=53) (actual time=628.375..13420.045 rows=588467 loops=1)

Merge Cond: (md.id = br.record_id)

-> Merge Join (cost=60430.93..209623.84 rows=546 width=53) (actual time=628.357..11540.587 rows=588467 loops=1)

Merge Cond: (md.id = brp.bib_record_id)

-> Index Scan using pk_record_id on record_metadata md (cost=0.00..604517.90 rows=5442 width=12) (actual time=628.318..9858.445 rows=1074590 loops=1)

Filter: (((record_type = 'b'::bpchar) AND (char_length((campus_code)::text) = 0)) OR (campus_code IS NULL))

-> Index Scan using idx_bib_record_property__bib_record_id on bib_record_property brp (cost=0.00..38850.44 rows=588982 width=41) (actual time=0.023..800.874 rows=588467 loops=1)

-> Index Scan using bib_record_record_key on bib_record br (cost=0.00..63943.76 rows=584471 width=24) (actual time=0.012..1070.222 rows=588467 loops=1)

-> Index Scan using uc_bib_record_item_record_link on bib_record_item_record_link (cost=0.00..1.24 rows=12 width=16) (actual time=0.005..0.010 rows=3 loops=588467)

Index Cond: (bib_record_id = br.id)

Total runtime: 23819.693 ms

An alternative SQL statement that produces the same result might be more efficient:

--

-- list bibs with more than x items

--

EXPLAIN ANALYZE SELECT

b.record_num AS "bib_record",

date(b.cataloging_date_gmt) AS "bib_cataloged_dt",

b.title AS "bib_title",

a.item_count AS "item_count"

FROM

(SELECT

l.bib_record_id AS "bib_record_id",

count(l.id) AS "item_count"

FROM

sierra_view.bib_record_item_record_link l

GROUP BY l.bib_record_id

HAVING count(l.id) > 100) AS a

JOIN sierra_view.bib_view b ON a.bib_record_id = b.id

PostgreSQL returns the following plan:

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------------------------------

Merge Join (cost=207567.02..235100.81 rows=140 width=53) (actual time=3294.564..6361.711 rows=233 loops=1)

Merge Cond: (md.id = br.record_id)

-> Merge Join (cost=60430.93..209623.84 rows=546 width=53) (actual time=605.104..3639.233 rows=571278 loops=1)

Merge Cond: (md.id = brp.bib_record_id)

-> Index Scan using pk_record_id on record_metadata md (cost=0.00..604517.90 rows=5442 width=12) (actual time=605.065..2356.296 rows=1056815 loops=1)

Filter: (((record_type = 'b'::bpchar) AND (char_length((campus_code)::text) = 0)) OR (campus_code IS NULL))

-> Index Scan using idx_bib_record_property__bib_record_id on bib_record_property brp (cost=0.00..38850.44 rows=588982 width=41) (actual time=0.021..646.761 rows=571278 loops=1)

-> Sort (cost=132107.36..132484.78 rows=150967 width=24) (actual time=2639.468..2639.595 rows=233 loops=1)

Sort Key: br.record_id

Sort Method: quicksort Memory: 43kB

-> Hash Join (cost=107238.06..119121.28 rows=150967 width=24) (actual time=2340.560..2639.222 rows=233 loops=1)

Hash Cond: (a.bib_record_id = br.id)

-> Subquery Scan on a (cost=79925.46..83322.21 rows=150967 width=16) (actual time=1800.379..2033.996 rows=233 loops=1)

-> HashAggregate (cost=79925.46..81812.54 rows=150967 width=16) (actual time=1800.377..2033.845 rows=233 loops=1)

Filter: (count(bib_record_item_record_link.id) > 100)

-> Seq Scan on bib_record_item_record_link (cost=0.00..66773.69 rows=1753569 width=16) (actual time=0.013..448.341 rows=1752106 loops=1)

-> Hash (cost=16581.71..16581.71 rows=584471 width=24) (actual time=540.008..540.008 rows=588467 loops=1)

Buckets: 32768 Batches: 2 Memory Usage: 16086kB

-> Seq Scan on bib_record br (cost=0.00..16581.71 rows=584471 width=24) (actual time=0.018..312.221 rows=588467 loops=1)

Total runtime: 6368.756 ms

The cost has increased to 235,100 8K pages, yet the resulting runtime has dropped from 24 seconds to 6.3 seconds. (Even when run repeatedly!) Obviously, there is much more to runtime performance than I/O alone (on which cost is solely based). Assessment of the number and nesting of loops, the size of the sorts (and whether they are in memory or on disk), and other such considerations can all have a dramatic effect on runtime. Many people, even experienced developers, liken interpreting these plans to reading tea leaves.